Prior to launching the survey, researchers conducted a series of preliminary tests to determine how best to ask Americans to classify news-related statements. The purpose of these tests was to analyze the effects of changes in the language used in the question instructions and response options, the number of response options and the attributions of the statements to different news outlets. These tests were not intended to be representative of the U.S. adult population.

To explore different question wording options, a series of tests was conducted using SurveyMonkey’s online nonprobability panel. The sample size for each test ranged from 76 to 232 respondents. Each test included five to 22 news-related statements, a number of which were used in the final questionnaire. In addition to asking respondents to classify statements, each test included one of three open-ended questions, asking respondents about the challenges they had in classifying the statements, about their general experience with the survey, or about how they defined “facts” and “opinions.”

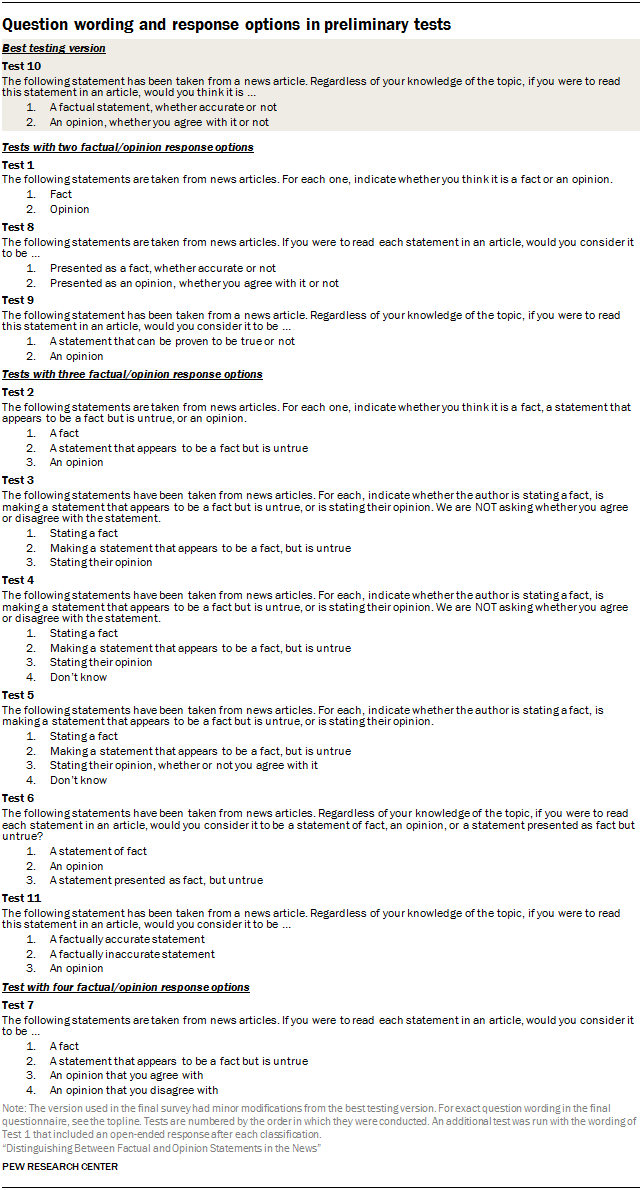

The table below provides the question instruction wording used with the statements included in each test.

Determining the level of explanatory language

The best testing version (test 10 in the table) included explanatory language in both the question instructions and the response options. Test results suggested that this added language helped people better understand the task, eased the pressure to have prior knowledge of the statement’s topic, and provided some guidance on what “factual statements” and “opinion statements” referred to.

Initial tests included no explanatory language, which, according to open-end responses, led to confusion among a number of respondents. (See the question wording in tests 1 and 2.) Some interpreted the exercise as either a knowledge quiz – in which they assessed the accuracy of the statement – or an evaluation of whether they agreed or disagreed with the statement.

Adding language to the factual (tests 2 through 11) and opinion response options (tests 5, 7, 8 and 10) specified the task more clearly. Some tests (tests 3 and 4) also experimented with adding the sentence “We are NOT asking whether you agree or disagree with the statement” to the question instructions, but tests with that addition did not perform better. Language was also added to the question instructions to lessen the cognitive difficulty respondents may have had when classifying statements (language such as “Regardless of your knowledge of the topic…” was added in tests 6, 9, 10 and 11).

Taken together, there was little evidence of confusion over what the question was asking in the best testing version.

Varying the number of response options

While the best testing version (test 10) included two response options – a factual statement, whether accurate or not, and an opinion, whether you agree with it or not – these tests also explored versions with three and four factual/opinion response options.

Tests with three response options (tests 2 through 6 and 11) included one opinion option and two factual options: an accurate factual statement and an inaccurate factual statement. While this deterred people from categorizing statements that they perceived to be inaccurate as opinions, the imbalance of factual and opinion options substantially decreased the likelihood of someone selecting the single opinion option.

The version with four response options (test 7), which included two factual options (accurate and inaccurate) and two opinion options (an opinion you agree with and an opinion you disagree with), made the task more difficult. Respondents had to make two classifications at once (factual vs. opinion, and either accurate/inaccurate or agree/disagree), which resulted in an increase in item nonresponse.

Attributing sources to news statements

Additional tests, using the language and response options from tests 2, 8, 10 and 11, layered news outlet attributions onto the statements. In each test, a respondent saw one of four options: statements attributed to an outlet with a left-leaning audience (The New York Times), a right-leaning audience (Fox News Channel), a mixed audience (USA Today) or no outlet. In these tests, each respondent saw the same outlet for all statements.

When certain language such as “presented as” was used (in test 8), many respondents answered based on how they thought the news outlet was classifying the statement, not how they themselves would classify it. This finding also informed the decision to avoid this wording for the main set of items. Otherwise, no additional differences arose when source attributions were added.